At 00:58:53 UTC on December 26, 2004, a magnitude 9.1 subduction earthquake struck off the west coast of Sumatra. The event was recorded on seismographs throughout the world, and the Pacific Tsunami Warning Center issued a bulletin at 01:14 UTC, December 26, 2004 - http://www.prh.noaa.gov/ptwc/messages/pacific/2004/pacific.2004.12.26.011419.txt. A second bulletin, upgrading the magnitude estimate of the earthquake from 8.0 to 8.5, followed at 02:04 UTC, December 26, 2004 - http://www.prh.noaa.gov/ptwc/messages/pacific/2004/pacific.2004.12.26.020428.txt. Later, the same center issued further bulletins (December 27, 2004) for the Pacific Basin, advising shore areas of sea level differences of upwards of half a meter from crest to trough.

The Indonesian Tsunami event resulted in an estimated 230,000 casualties and is an example where a form of evaluation, in this case observational assessment, could have saved lives.

In 1949, the Pacific Tsunami Warning Center was established in response to the 1946 Aleutian Islands Tsunami, which was tied to 165 deaths between Alaska and Hawaii. The system relies first on seismic data, which was all that was available to the center at the time of the 2004 Tsunami. No similar center existed for the Indian Ocean; the closest was in Japan. However, the initial magnitude of the quake did indicate to some individuals that a significant event would occur in the Indian Ocean.

At this point this blog will move from fact to a bit of conjecture, as I am unable to find actual evidence that the following is true, but it certainly seems plausible.

Apparently, NOAA (National Oceanic and Atmospheric Administration), a United States Department of Commerce agency, attempted to contact government officials in countries that border the Indian Ocean to provide warning. Further, as the Tsunami made its way across the ocean, the warning was passed from government to government. Unfortunately, no method was in place to disseminate this critical information effectively and efficiently, and hundreds of thousands of people were caught unaware. Their first warning was either the sharp drop in sea level caused by the preceding trough or the froth of the crest on the horizon.

Be that as it may, there are two major facts of this tragedy:

1) Only incomplete evaluation systems were available to assess the issue (NOAA knew there was a possibility of a Tsunami, but had no idea of its intensity or direction).

2) There was no dissemination plan for the emergency.

The United Nations has taken steps to ensure that the "evaluation" of sea level is implemented consistently. There are plans for similar systems to be set up in the Mediterranean Sea, the Northeastern Atlantic, and the Caribbean Sea. The expectation is that major communities can be given warnings that will allow for timely evacuations. The issue is still dissemination, and it remains to be seen how effective the program will be.

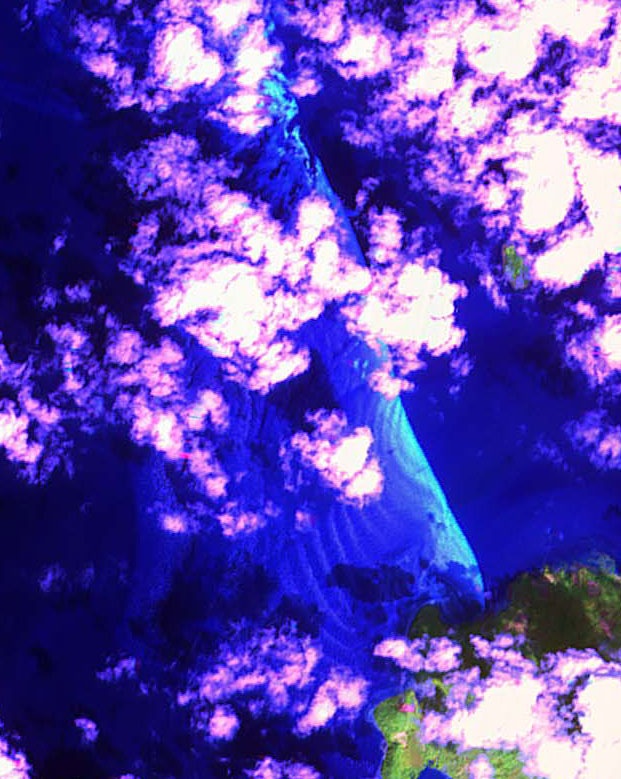

There is an even more striking "measure", one that wasn't discovered until recently, that jarred my thoughts around evaluation. The Centre for Remote Imaging, Sensing and Processing recently made available images that they unearthed when reviewing satellite imagery from the date of the Tsunami. As can be seen in the attached images (http://www.crisp.nus.edu.sg/tsunami/waves_20041226/waves_zoom_in_b.jpg , http://www.crisp.nus.edu.sg/tsunami/waves_20041226/waves_zoom_in_c.jpg), the waves from the Tsunami were clearly visible - giving a sense of intensity and direction of propagation.

The system, which had the trip-wire measure of the seismograph, could have been enhanced with information from the satellite images. But again, the "evaluation" would have been useless, as it would not have been presented to the individuals who would have benefited most from it. Instead, the connected world watched helplessly as the waves crossed the Indian Ocean and inundated the coastal communities, killing hundreds of thousands of people.

Being an evaluator, I take home two critical thoughts from this:

1) Evaluation of systems is very important; in some cases, it doesn't just describe a program, but can in fact save lives.

2) Evaluation is only good if it is placed in the hands of the people who need the information to inform their decision making.

Evaluation can serve as a warning of impending doom for a program.

Granted, the earthquake and subsequent tsunami were a very low risk for the region, even given the fact that the region rests in a very tectonically violent area. The UN has "learned its lesson" and is implementing a system in the region, deploying evaluation after the program of safety for the population in the area failed (reached a very negative outcome). Today, nonprofits as well as for-profit businesses, funders, and the like (really all of us) have received tremors that might indicate a larger earthquake that will crush us further. Even given the fragility of economies, knowing where the program is going and what is going well (and conversely, what is not) is critical. In most cases, the programming isn't going to harm individuals, and the loss of it will not have the same widespread impact as a tsunami, but the metaphor remains. A few key sensors connected to the right stakeholders can forestall major disaster.

As always I'm open to your comments, suggestions, and questions. Please feel free to post comments.

Best regards,

Charles Gasper

The Evaluation Evangelist