Much like the Kübler-Ross Stages of Grief (http://en.wikipedia.org/wiki/Kübler-Ross_model), many of the teams I’ve worked with have gone through stages that run from “it is too complex” to “yes, that is what we do and why we do it”. Once we get people to actually agree to engage with us, their models reflect the kind of complexity seen in models such as this.

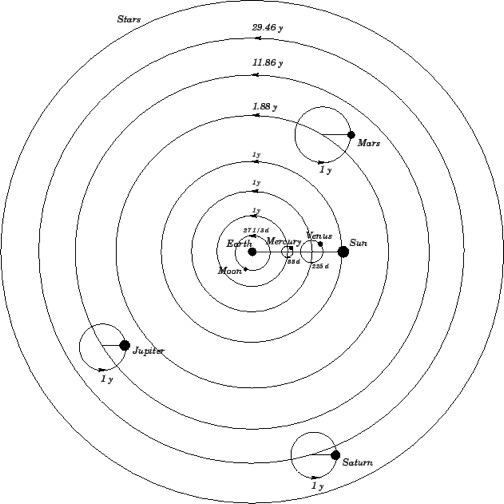

If you remember your high school physics class, or paid attention during any astronomy class, you will recognize the model above, found at (http://farside.ph.utexas.edu/teaching/301/lectures/node151.html), as the Ptolemaic model. To make the model work with Earth at the center, each planet needs to orbit around a central point while that central point in turn orbits around the Earth. The machine necessary to model this looks something like this, found here (http://remame.de/wp-content/uploads/2010/03/astrolabe_2.jpg):

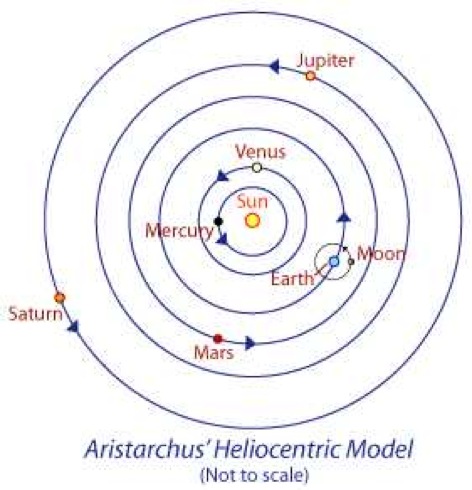

Note how intricate the gearing and process must be to reproduce the model’s complexity. However, there is another step – moving to a simpler model. That requires the team to take a step back and, rather than holding a geocentric viewpoint of their own program or organization, try to look at everything a little bit differently. In the case of astronomy, opening up to the notion that the Sun, not the Earth, is the center of gravity for our local solar system resulted in a simpler model – something less complex, found here (http://biology.clc.uc.edu/fankhauser/classes/Bio_101/heliocentric_model.jpg).

It also results in simpler mechanics, as can be found here (http://www.unm.edu/~physics/demo/html_demo_pages/8b1010.jpg).

The simpler model allows for a more accurate representation of what is actually happening, and then allows for corrections, such as accounting for the fact that the planets do not orbit the sun in perfect circles. In organizations and programs, similar moments of clarity allow the team to test deeper assumptions and improve their associated projects.

Now, let’s be honest: organizations and their programs, much like true orbital mechanics, aren’t simple – there are layers of complexity. However, there is true complexity, and there is complexity driven by poor assumptions or an inability to stop and look at things objectively. The role of the evaluator is to help break down these viewpoints so the team can see through the complexity they have invented out of their preconceived notions, down to the true underlying mechanics of their work and its outcomes. The process isn’t easy, and in some cases I’ve found that the work I do is more like that of a therapist than that of a researcher. There can be displays of frustration and anger as the team works its way through understanding their organization or program. And much like in some therapy sessions, the team can pretend that there is agreement among them when there isn’t – unifying against the evaluator to avoid the pain of the experience and/or the possible pain of discovering that their world view isn’t as clear as they would like. I will write more about process another day, but suffice it to say, opening people to other views can be rather difficult work.

So, back to the original question: is complexity an excuse or evidence of a lack of understanding? I’ve often found it can be both. With that in mind, the wise evaluator, interested in understanding the theories of an organization or program, will continue to try to get their team to “simplify” their model of their theory. It is in that simplification that the real and difficult discussions occur, the ones that provide insight into what the organization or program is trying to accomplish and how.

Also, please note that at no point did I say that complexity isn't a part of everything we do - it most certainly is. However, experience would indicate that when we think about what we do and how we do it, our mental models are significantly more complex than reality. Further, our perceptions of what we do and why are often colored by how important we want to feel and how much we desire others to understand how difficult it is to be us. To those of you who fight to help teams tease out the true complexity from the self-generated complexity… To those of you who struggle to bring clarity to a complex world… Thank you!

As always, I'm open to your comments, suggestions, and questions. Please feel free to post comments.

Best regards,

Charles Gasper

The Evaluation Evangelist